Are you looking for how to master Prompt Engineering Strategies for optimal results? Are you ready to embark on a journey that will give you the essential skills to harness the true potential of large language models (LLMs) like ChatGPT?

Congratulations! You have found what you are looking for.

In this comprehensive guide to prompt engineering, led by the esteemed instructor Digital Wealth Guru, you will delve deep into prompt engineering.

Moreover, you’ll master this skill even with no coding background.

Let’s begin this educational adventure by thoroughly understanding prompt engineering, why it holds immense significance, and how it emerged in the age of artificial intelligence (AI).

What Is Prompt Engineering?

Prompt engineering, at its core, is a profession that emerged alongside the ascent of artificial intelligence. Its primary focus lies in the human-driven process of crafting, refining, and optimizing prompts in a meticulously structured manner.

The ultimate objective?

To perfect the interaction between humans and AI to an unprecedented level of excellence. However, the role of prompt engineers extends beyond the initial crafting phase. These experts are also entrusted with continuously monitoring prompts, ensuring their efficacy as AI evolves.

Furthermore, maintaining an up-to-date prompt library is a fundamental requirement for prompt engineers, alongside the responsibility of reporting their findings and establishing themselves as thought leaders in this rapidly evolving field.

The Evolution of Artificial Intelligence (AI)

Before delving into the intricacies of prompt engineering, it’s essential to clarify the concept of AI. Artificial intelligence essentially simulates human intelligence processes through machines, but it’s imperative to note that, as of now, AI lacks true sentience.

When the term “AI” is applied to tools such as ChatGPT, it usually refers to machine learning. Machine learning operates by extensively analyzing training data to identify correlations and patterns.

These patterns are subsequently utilized to predict outcomes based on the training data provided.

To illustrate, consider a scenario where we feed data indicating that a paragraph with specific characteristics should be categorized as “international finance.”

With the right coding, we can train our AI model to predict the categories of future paragraphs accurately. While this is a simplified example, it illustrates the core concept of machine learning, which is fundamentally data-driven pattern recognition.
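To make the idea concrete, here is a minimal sketch of data-driven pattern recognition in Python. It is not how a production model works: the training paragraphs, the "sports" category, and the word-counting approach are all assumptions chosen to keep the example tiny.

```python
from collections import Counter

# Hypothetical labeled training data: (paragraph, category) pairs.
TRAINING_DATA = [
    ("The central bank raised interest rates as the currency fell against the dollar",
     "international finance"),
    ("Exchange rates and foreign bond markets reacted to the trade deficit",
     "international finance"),
    ("The striker scored twice and the team won the league match", "sports"),
    ("The coach praised the players after the final match of the season", "sports"),
]


def train(examples):
    """Count how often each word appears under each category."""
    word_counts = {}
    for text, category in examples:
        word_counts.setdefault(category, Counter()).update(text.lower().split())
    return word_counts


def predict(word_counts, paragraph):
    """Pick the category whose training words overlap most with the paragraph."""
    words = paragraph.lower().split()
    scores = {
        category: sum(counts[word] for word in words)
        for category, counts in word_counts.items()
    }
    return max(scores, key=scores.get)


model = train(TRAINING_DATA)
print(predict(model, "Foreign currency markets fell after the bank changed interest rates"))
```

The "model" here is nothing more than word counts per category, yet it already predicts a label for a paragraph it has never seen, which is the pattern-recognition idea in miniature.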

The Power of Machine Learning

The utility of machine learning lies in its remarkable ability to make sense of extensive datasets, enabling the prediction of outcomes based on recognized patterns.

Whether you’re a novice or aspiring to gain a deeper understanding of machine learning, grasping its fundamental principles is paramount.

Suppose you’re interested in creating your own AI models and want to explore the concept of machine learning as a beginner. In that case, I highly recommend reading our introductory article on this blog, “Prompt Engineering: The Ultimate Guide To Boost Your AI Tool Skills.”

Why Prompt Engineering Matters

Prompt engineering has risen in prominence due to the rapid proliferation of AI. Even the creators of AI grapple with controlling its outputs.

Consider this scenario: when you pose a straightforward math question to an AI chatbot, such as “What is four plus four?” you naturally expect an unequivocal response of “eight.”

However, complexities arise when AI responses influence the learning experiences of individuals.

Imagine being a teenage student trying to learn English and receiving inconsistent responses from AI.

With the right prompts, we can harness the potential of AI to provide tailored, high-quality responses. To illustrate this concept further, let’s examine an example using ChatGPT’s GPT-4 model.

Crafting Effective ChatGPT Prompts

To demonstrate the transformative power of prompts, let’s begin by correcting a poorly written paragraph. The initial response from AI leaves substantial room for improvement.

However, with a well-crafted prompt, we can metamorphose this interaction into an engaging and educational experience.

By instructing AI to assume the role of a spoken English teacher, correct grammar mistakes and typos, and ask questions, we create a dynamic and interactive dialogue. This serves as a tangible example of how prompts can guide AI to deliver meaningful and relevant results, thereby making the learning process more effective and engaging.

The Role of Linguistics

Linguistics, the scientific study of language, is pivotal in prompt engineering. Understanding the intricate nuances of language and universally accepted grammar structures is fundamental to crafting effective prompts.

AI models rely heavily on standardized language and grammar, making adherence to these conventions crucial for optimizing outcomes.

The World of Language Models

Imagine a world where computers effortlessly comprehend and generate human language. Welcome to the realm of language models.

These digital wizards are engineered to understand and generate human-like text by learning from vast collections of written text.

They analyze sentence structures, word meanings, and contextual relationships to generate coherent and contextually accurate responses. Language models find applications across various domains, including virtual assistants, chatbots, and creative writing.

They enhance numerous aspects of our digital lives by assisting in information retrieval, offering suggestions, and generating content.

It’s important to note that while language models possess extraordinary capabilities, their effectiveness hinges on the human component responsible for creating and training them.

In essence, they represent a fusion of human ingenuity and the power of algorithms, combining the best of both worlds.

A Journey Through Language Models

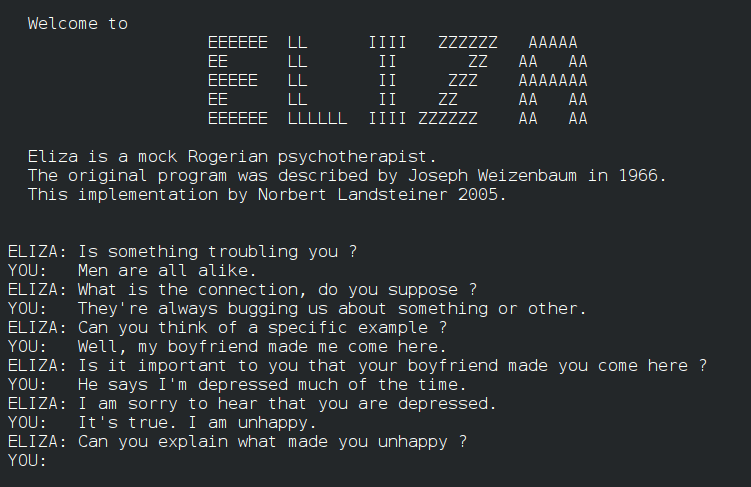

The history of language models stretches back to the 1960s with the advent of Eliza, a pioneering natural language processing program. Eliza was designed to simulate human conversations, specifically mimicking the role of a Rogerian psychotherapist.

It excelled at listening attentively and posing probing questions to guide individuals in exploring their thoughts and emotions.

Eliza’s secret weapon was its mastery of pattern matching, boasting a repository of predefined patterns, each associated with specific responses. These patterns functioned like magical spells, enabling Eliza to understand and provide responses to human language effectively.

When conversing, Eliza would meticulously analyze input, seeking patterns and keywords. Subsequently, it would transform the user’s words into a series of symbols and search for patterns matching those symbols in its extensive repertoire.

Once a pattern was detected, Eliza would work magic by transforming the user’s words into a question or statement to delve deeper into their thoughts and emotions. Importantly, Eliza didn’t genuinely comprehend the user’s input. Instead, it created the illusion of understanding through pattern matching and predefined rules.
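The pattern-matching idea can be sketched in a few lines of Python. The rules below are hypothetical and written only in the spirit of Eliza; the original program used a much larger script and also swapped pronouns, which this sketch leaves out.

```python
import re

# A few hypothetical rules; each pairs a pattern with a response template.
RULES = [
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (mother|father|sister|brother)\b", re.IGNORECASE),
     "Tell me more about your {0}."),
]
FALLBACK = "Please go on."


def respond(user_input):
    """Return the response for the first matching rule, or a generic fallback."""
    text = user_input.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            # Reuse the user's own words inside the canned response.
            return template.format(match.group(1))
    return FALLBACK


print(respond("I feel tired today."))
print(respond("Hello"))
```

Nothing in this code understands the input. It only reflects the user's words back inside a template, which is exactly why the sense of being understood is an illusion.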

Nevertheless, users often found themselves captivated by its conversational abilities, feeling heard and understood, even though they were conversing with a machine. It was akin to having a digital confidant, always ready to listen and offer gentle guidance.

Eliza’s creator, Joseph Weizenbaum, designed the program to explore communication between humans and machines. He was surprised when individuals, including his secretary, attributed human-like feelings to the computer program.

Eliza’s impact was profound, igniting interest and research in natural language processing and setting the stage for more advanced systems capable of genuinely understanding and generating human language.

Fast forward to the 1970s, when a program named SHRDLU appeared. Although not a traditional language model, SHRDLU could understand simple commands and interact with a virtual world of blocks, laying the groundwork for machines to comprehend human language.

The era of true language models began around 2010 with the emergence of deep learning and neural networks.

Enter the mighty GPT, short for generative pre-trained transformer, poised to revolutionize the world of language models. In 2018, GPT-1 arrived, representing the first iteration in the GPT series by OpenAI. This early model was trained on a substantial amount of text data, absorbing knowledge from books, articles, and a significant portion of the internet.

While GPT-1 was impressive, it paled in comparison to the descendants we rely on today. As time progressed, GPT-2 debuted in 2019, followed by the remarkable GPT-3 in 2020. GPT-3, with 175 billion parameters, stunned the world with its ability to understand, respond, and even generate creative writing pieces.

Its arrival marked a pivotal moment in language models and AI. As of the time of writing, GPT-4 has emerged, trained on an extensive dataset encompassing a substantial portion of the internet. Additionally, models like BERT from Google have also entered the landscape.

We are only at the beginning of exploring the vast potential of language models and AI. Learning how to harness this potential through prompt engineering is a smart move in today’s rapidly evolving technological landscape.

The Prompt Engineering Mindset

To excel in prompt engineering, it’s essential to cultivate the right mindset. Think of it as a way to write just one prompt and avoid wasting time and tokens on numerous attempts until you achieve the desired result. This mindset is akin to becoming adept at crafting effective Google searches.

Over the years, your Google search skills have likely improved significantly, allowing you to find what you need more efficiently on your first try. This same mindset can be applied to prompt engineering. As Mihail Eric of the Infinite Machine Learning Podcast aptly says, “Prompting is akin to designing effective Google searches. There are better and worse ways to write queries against the Google search engine to accomplish your task.”

This variance exists due to the opaqueness of what Google does behind the scenes. This analogy will serve as a guiding principle throughout the course, ensuring you approach prompt engineering with precision and efficiency.

Using ChatGPT by OpenAI

As part of this course, we will utilize ChatGPT’s GPT-4 model for our examples. To follow along and gain a comprehensive understanding of the platform, you can sign up on openai.com. I will guide you through the platform’s usage, ensuring you are comfortable and proficient in interacting with ChatGPT.

Understanding AI Tokens

Tokens are a fundamental concept in using ChatGPT, and it’s possible to encounter token limits during interactions. GPT-4 processes text in token chunks, where a token is approximately equivalent to four characters or 0.75 English words.

Users are billed based on the number of tokens used, making it crucial to have a clear understanding of token usage. I will demonstrate how to keep track of tokens within the platform, ensuring you can optimize your interactions effectively.
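As a rough illustration, the rules of thumb above can be turned into a quick estimator. This is only an approximation: real token counts depend on the model's tokenizer, and the price used below is a made-up placeholder, not an actual OpenAI rate.

```python
import math

CHARS_PER_TOKEN = 4      # rule of thumb from the text: ~4 characters per token
WORDS_PER_TOKEN = 0.75   # rule of thumb from the text: ~0.75 English words per token


def estimate_tokens_from_chars(text):
    """Estimate the token count from the character count."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def estimate_tokens_from_words(text):
    """Estimate the token count from the word count."""
    return math.ceil(len(text.split()) / WORDS_PER_TOKEN)


def estimate_cost(token_count, price_per_1k_tokens):
    """Estimate cost given a price per 1,000 tokens (the price is an assumption)."""
    return token_count / 1000 * price_per_1k_tokens


prompt = "When is the next presidential election for Poland?"
tokens = estimate_tokens_from_words(prompt)
print(tokens, estimate_cost(tokens, price_per_1k_tokens=0.5))
```

The two estimators will usually disagree slightly, so treat either number as a ballpark figure when budgeting a long prompt.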

Best Practices For Prompt Engineering Strategies

The biggest misconception about prompt engineering is that it’s an easy job without science. I imagine many people think it’s just about constructing a one-off sentence such as “correct my paragraph,” which we saw in the previous example.

When you start to look at it, creating effective prompts relies on many different factors. Here are some things to consider when writing a good prompt:

- Write clear instructions with details in your query.

- Adopt a persona.

- Specify the format.

- Use iterative prompting. If you have a multi-part question or the first response wasn’t sufficient, you can continue by asking follow-up questions or asking the model to elaborate.

- Avoid leading the answer. Try not to make your prompts so leading that they inadvertently tell the model what answer you expect. This might bias the response unduly.

- Limit the scope for long topics. If you’re asking about a broad topic, it’s helpful to break it down or limit the scope to get a more focused answer.

Let’s look at some of these now. To write clearer instructions, we can include more detail in our queries. To get the best results, don’t assume the AI knows what you are talking about. Writing something like, “When is the election?” assumes that the AI knows which election and which country you mean.

This may result in you asking a few follow-up questions to get the desired result, resulting in time loss and, frankly, some frustration.

Consider taking the time to write a prompt with clear instructions.

So, for example, instead of writing, “When is the election?” you could write, “When is the next presidential election for Poland?”

So, let’s go ahead and run this. The prompt is much more precise, so the AI knows exactly what we are asking about. It doesn’t have to guess, which saves our time and our resources: the tokens we use, and therefore the money we spend, go toward getting the right answer the first time.

Here are some other examples of how you could write clearer prompts. Take the prompt, “Write code to filter out the ages from data.” If you run it, you don’t know which language the answer will come back in. Let’s see.

In this case, it’s using Python. I didn’t want Python. We’ve now spent tokens and time on a response we can’t use, and this could have been easily avoided. So, I will stop the generation and try again.

So this time, let’s be more specific by writing, “Write a JavaScript function that will take an array of objects and filter out the value of age property and put them in a new array. Please explain what each code snippet does.” In this example, I am not assuming the AI knows what computer language I like to use, and I am being more specific about what my data looks like. On this occasion, it’s an array of objects.

Not only that, I’m also asking the AI to explain why it’s doing each step so that I, in turn, can understand and not just copy-paste the code without gaining any knowledge from it.

Okay, here you can see a live example of what’s coming back to us from GPT-4. It’s given us the correct code; I have checked it. It also gave us an example of how to use the function, which I didn’t ask for but which is super useful. It’s gone above and beyond to help me understand what is happening.

Let’s look at another example.

We can write, “Tell me what this essay is about.” I’m going to type this, paste in an essay, and hit go. ChatGPT will then summarize it as best it can. On this occasion, it gives me numbered points about what the essay is about.

They’re long. I didn’t want to read this much. It’s pretty much looking to be the same as the original essay. So, this is not something I wanted. I should have been way more specific in telling it what I need.

What I’m going to do is add to this conversation, so the model can build on what I wrote previously. I’m going to tell it to use bullet points to explain what this essay is about, making sure each point is no longer than ten words.

I am being super specific in the instructions about what I want, so let’s hit go. Now this is a lot shorter. As you can see, each point is no longer than ten words. After the bullet points, I also get a slightly longer summary of the essay that I pasted above.

Great, of course, you can do this on any essay or piece of text that you wish, and you can set your own clear and specific instructions as well. I hope you can see why the second one is better. It’s because I am not assuming the AI knows what format I want the summarization of the essay to be in.

I am being specific in that I want very short notes on the essay in bullet point format with a short conclusion at the end. If I had not put this, the summary could have been just as long as the essay itself, and the prompt could have been considered useless in my eyes.
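If you reuse a format instruction like this often, you may want to check that a response actually follows it. The helper below is a hypothetical sketch, not part of ChatGPT or any library; it assumes bullets start with "-", "*", or "•" and simply counts the words in each one.

```python
def bullets_within_limit(response, max_words=10):
    """Return True if the response has bullets and each has at most max_words words."""
    bullets = [
        line.strip().lstrip("-*• ").strip()
        for line in response.splitlines()
        if line.strip().startswith(("-", "*", "•"))
    ]
    # An answer with no bullets at all also fails the format check.
    return bool(bullets) and all(len(b.split()) <= max_words for b in bullets)


sample = "- Essay argues remote work boosts productivity\n- Cites three company case studies"
print(bullets_within_limit(sample))
```

A check like this is useful when the same prompt runs many times, because it flags the responses that ignored the requested format and need a follow-up.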

Next up, we can also adopt a persona.

When writing prompts, it is sometimes helpful to create a persona. This means asking the AI to respond to you as a certain character.

Exactly like the English language teacher’s example we saw earlier, using a persona in prompt engineering can help ensure that the language model’s output is relevant, useful, and consistent with the preferences and needs of the target audience, making it a powerful tool for developing effective language models that meet the needs of users.

Let’s look at some examples of adopting a persona.

So, for example, you’ll have this prompt right here. “Write a poem for a sister’s high school graduation that will be read to family and close friends.”

So let’s go ahead and run this, and let’s see what comes back. It is quite good: “In a room filled with kin and close ties, we gathered to honor the mist in our eyes, for a journey has ended and another begun, as our dear sister steps into the sun.”

It’s coming out with a poem I can see here. It is quite a good one, I guess, probably better than anything that I would have written. And it is maybe a little bit generic. Maybe that’s what you wanted. I think we can do better than this.

So let’s try this. This time, I’m going to write a prompt with a persona, so I will specify who I’m writing as. I’m going to write the poem as Helena.

“Helena is 25 years old and an amazing writer. Her writing style is similar to the famous 21st-century poet Rupi Kaur. Writing as Helena, write a poem for her 18-year-old sister to celebrate her sister’s high school graduation. This will be read to friends and family at the gathering.”

Okay, so let’s check it out now. We’re writing as Helena, she’s 25, an amazing writer, and we’ve also assigned a writing style. Now, ChatGPT should be using anything it knows about Rupi Kaur, hopefully from the internet, to apply that style to this poem.

This is maybe a little bit more affectionate. We’ve said sister; it’s a younger sister, so the words little sister are being used. In general, I think this is a much higher quality poem, and if Helena truly does have the style of Rupi Kaur in writing, it will be almost indistinguishable who wrote this poem, ChatGPT or Helena.

So here we go, here’s the full thing. It starts, “In the garden of our youth, I watch you bloom from bud to blossom, from child to woman, 18 summers passed,” again utilizing the fact we fed it, that she was 18. It continues, “and every winter’s chill only made you stronger, a force of nature still.” So yes, in my eyes, this poem is a lot better. It’s much more refined and much more personal, thanks to the prompt that we wrote.
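A persona prompt like the Helena one follows a repeatable shape: who the persona is, what style they write in, and what they are asked to do. The sketch below captures that shape as a small template function; the function and parameter names are hypothetical, chosen only for illustration.

```python
def build_persona_prompt(name, age, traits, style_reference, task):
    """Assemble a persona prompt: who the persona is, their style, then the task."""
    return (
        f"{name} is {age} years old and {traits}. "
        f"{name}'s writing style is similar to {style_reference}. "
        f"Writing as {name}, {task}"
    )


prompt = build_persona_prompt(
    name="Helena",
    age=25,
    traits="an amazing writer",
    style_reference="the famous 21st-century poet Rupi Kaur",
    task=(
        "write a poem for her 18-year-old sister to celebrate her sister's "
        "high school graduation. This will be read to friends and family."
    ),
)
print(prompt)
```

Keeping the persona details as separate arguments makes it easy to swap in a different character or style without rewriting the whole prompt.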

We’ve already briefly looked at specifying the format when we limited the word count of our bullet points in a previous example. That was a great example; limiting words is one that I use often. However, we can do many other things, including specifying if something is a summary, a list, or a detailed explanation.

Zero-Shot and Few-Shot Prompting

Now that we have looked at some best practices, let’s move on to more advanced topics in prompt engineering. In this section, I’m going to talk about two types of prompting we can do: zero-shot prompting and few-shot prompting.

Zero-Shot Prompting:

Zero-shot prompting leverages a pre-trained model’s understanding of words and concept relationships without further training. In the context of the GPT-4 model, we don’t need to do much.

We are already using all of the data that it has when we ask questions like “When is Christmas in America?” So, let’s go ahead and do some zero-shot prompting: “When is Christmas in America?”

So, as you can see, zero-shot prompting refers to querying models like GPT without explicit training examples for the task at hand. In the context of machine learning, zero-shot typically means that a model performs a task without having seen any examples of that task during its training.

Few-Shot Prompting:

Few-shot prompting enhances the model with training examples via the prompt, avoiding retraining. So, instead of zero examples, we give it a tiny bit of data. So let’s think, what would GPT-4 not know? I guess it would not know my favorite types of food.

For example, let’s ask, “What are Ania’s favorite types of food?” It could guess, but no, it’s just telling me that it doesn’t know. That is fine; let’s stop generating. Now let’s feed it a tiny bit of example data: “Ania’s favorite types of food include burgers, fries, and pizza,” and hit enter.

So, we are essentially giving ChatGPT some information. So now, if I type, “What restaurant should I take Ania to in Dubai this weekend?” and hit here, it should hopefully understand that my favorite types of foods are burgers, fries, and pizza, and given that, find me some restaurants in Dubai that I would like to go to.

Here are some. ChatGPT’s knowledge was last updated in September 2021, but these are pretty good ones, so I would go to these. This is a great example of few-shot prompting: it wouldn’t have been able to answer this question if I hadn’t given it some example data. Note that the examples live only in the prompt; the model itself is not retrained.
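The same idea can be written as a small helper that puts the example data ahead of the question in a single prompt. This is a sketch under assumptions: the function name and the exact wording of the prompt are illustrative, not a required format.

```python
def build_few_shot_prompt(examples, question):
    """Place example statements before the question so the model can draw on them."""
    lines = ["Use the following examples when answering:"]
    lines += [f"- {example}" for example in examples]
    lines += ["", f"Question: {question}"]
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    examples=["Ania's favorite types of food include burgers, fries, and pizza."],
    question="What restaurant should I take Ania to in Dubai this weekend?",
)
print(prompt)
```

Passing an empty list of examples reduces this to a zero-shot prompt, which makes the difference between the two approaches easy to see side by side.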

AI Hallucinations:

Now we’re delving into something you probably never thought you’d hear in an AI context, and that’s hallucinations. So, what exactly are AI hallucinations? And no, they’re not when your AI assistants start seeing unicorns and rainbows.

AI Hallucinations is actually a term that refers to the unusual outputs that AI models can produce when they misinterpret data. A prime example of this is Google’s Deep Dream.

Deep Dream is an experiment that visualizes the patterns learned by a neural network. It is built to overinterpret and enhance the patterns it sees in an image, or in other words, fill in the gaps with images. But sometimes those gaps can be filled with the wrong thing.

This is an example of an unusual output that AI models can produce when they misinterpret data. Now, why do these hallucinations happen, you ask? They’re trained on a huge amount of data, and they make sense of new data based on what they’ve seen before.

Sometimes, however, they make connections that are, let’s call it creative. And voila, an AI hallucination occurs. Despite their funny results, AI hallucinations aren’t just entertaining. They’re also quite enlightening. They show us how our AI models interpret and understand data. It’s like a sneak peek into their thought processes.

Vectors/Text Embeddings:

To finish off, I will leave you with a slightly more complex subject. We will take a quick look at the topic of text embedding and vectors.

In natural language processing (NLP) and machine learning, text embedding is a popular technique for representing textual information in a format that algorithms, especially deep learning models, can easily process.

LLM embedding in prompt engineering means representing prompts in a way that the model can understand and use. For this, the text of the prompt needs to be turned into a high-dimensional vector that holds semantic information. For example, if you use the create embedding API from OpenAI, the word “food” is represented by such a vector.

You will see that the word is represented by an array of lots and lots of numbers. But why do this? Well, think about it this way. If you ask a computer to come back with a similar word to “food,” you wouldn’t really expect it to come back with “burger” or “pizza,” right? That’s what a human might do when thinking of similar words to “food.”

A computer would more likely look at the word lexicographically, like when you scroll through a dictionary and come back with “foot,” for example. This is kind of useless to us. We want to capture a word’s semantic meaning. So, the meaning behind the word. Text embeddings do essentially that, thanks to the data captured in this super long array.

Now, I can find words similar to “food” in a large corpus by comparing text embedding to text embedding and returning the most similar ones. So words such as “burger” instead of “foot” will be more similar.
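Comparing embeddings usually comes down to measuring how closely two vectors point in the same direction, commonly with cosine similarity. The sketch below uses made-up three-dimensional vectors purely for illustration; real embeddings have hundreds or thousands of dimensions and come from an embedding model, not from hand-picked numbers.

```python
import math

# Made-up toy vectors for illustration only; real embeddings are far longer.
TOY_EMBEDDINGS = {
    "food":   [0.9, 0.8, 0.1],
    "burger": [0.8, 0.9, 0.2],
    "pizza":  [0.85, 0.75, 0.15],
    "foot":   [0.1, 0.2, 0.9],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_similar(word, embeddings, top_n=2):
    """Rank every other word by cosine similarity to the given word."""
    target = embeddings[word]
    scored = [
        (other, cosine_similarity(target, vector))
        for other, vector in embeddings.items()
        if other != word
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [other for other, _ in scored[:top_n]]


print(most_similar("food", TOY_EMBEDDINGS))
```

With these toy numbers, "burger" and "pizza" rank above "foot" even though "foot" is closer in spelling, which is the whole point of comparing meaning rather than letters.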

To create a text embedding of a word or even a whole sentence, check out the create embedding API from OpenAI.

Okay, great. Now that we’ve covered all of these advanced topics, I hope you’ve enjoyed this course on prompt engineering and gained valuable insights into how to use ChatGPT and other AI models effectively.

Conclusion: Mastering Prompt Engineering Strategies

Now that we have established a strong foundation, you are poised to delve deeper into prompt engineering. Through this course, you will gain mastery in crafting prompts that elicit perfect responses from AI, whether it be ChatGPT or other LLMs. Join me on this exhilarating educational journey, and let’s embark on this exciting quest together!
